For the past couple of weeks, Chén Yè and I have been prototyping an augmented reality table. Driven by an impending deadline for final-project proposals for a class and an intrepid desire to push the frontiers of uncharted interfaces, we threw together a couple of wooden boards, a webcam, a projector, frosted acrylic and an unholy amount of tape to make a video-screen table that knows what's on it, letting you interact with software by picking up and placing objects on its surface. Here's how we did it.

The Prototype

We started by laying out a few key features our prototype would have:

- An elevated, flat surface, because that's pretty much the minimum viable table

- The ability to "see" what's placed on it, using a camera somewhere, connected to a computer running some sort of image processing code

- The ability to provide visual feedback on the surface of the table

- Code to tie the object recognition and projection together—to let the objects on the table directly affect the image projected on its surface.

We deliberately put off answering a crucial question — what the software would actually do. We wanted to build a blank slate, a piece of hardware that could support all kinds of interesting programs.

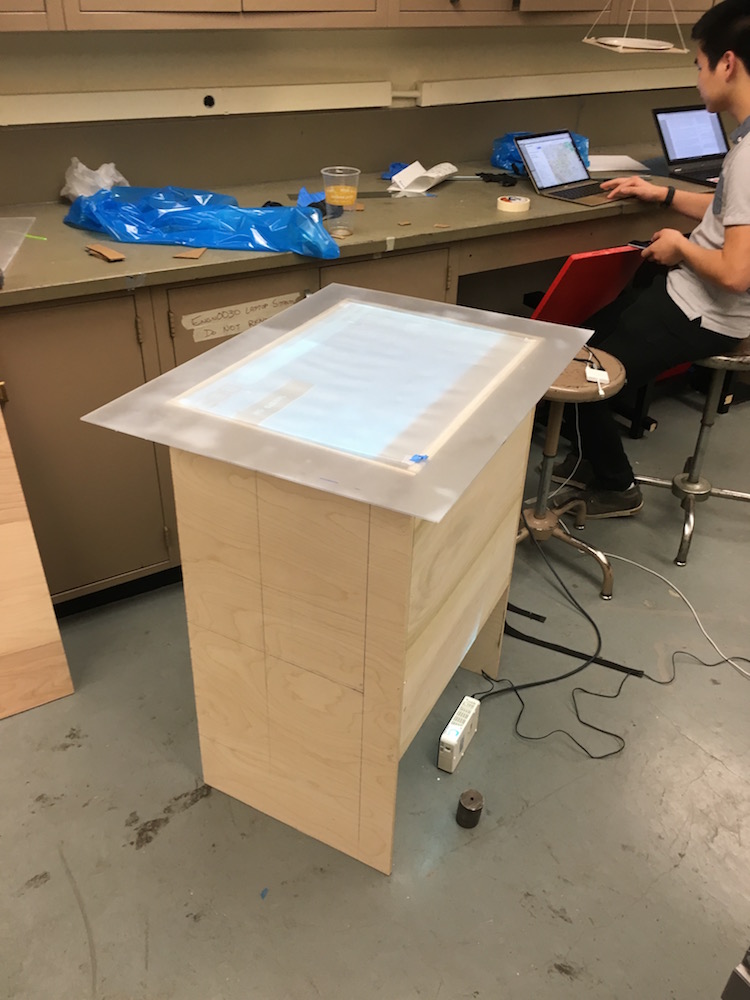

The Wood

As someone with zero furniture-building experience, I found the prospect of building an actual table daunting, so I sketched out the simplest table I could imagine. Rather than four freestanding legs, we used four sides, two of which (the long sides) were shorter than the others and didn't reach the floor. This made our table into a sort of box, which did a good job of hiding some of the equipment. Because the long sides didn't reach the floor, the table looked more open and table-like, rather than some sort of mysterious column. The gap also allowed easy access to the bottom half of the table's interior, which was crucial for reaching the electronics.

It took only a couple of hours of band-sawing and nervous nail-gunning to transform two large slabs of wood into a decidedly structurally unsound piece of furniture. Satisfied enough, we moved on to the next challenge: building the tabletop itself.

The Blurry-Surface Trick

Getting the table to recognize objects placed on it required a bit of cleverness. We considered placing a camera above the table, pointing down at its surface, but that would have meant assembling a separate piece of equipment outside the table. Instead, we chose to make the surface of the table transparent, with a camera below the surface, pointing up. This seemed like the right approach, but it introduced a couple of challenges:

- How do we discriminate what's on the table's surface from what's above it?

- How do we project an image on the table if the surface is transparent?

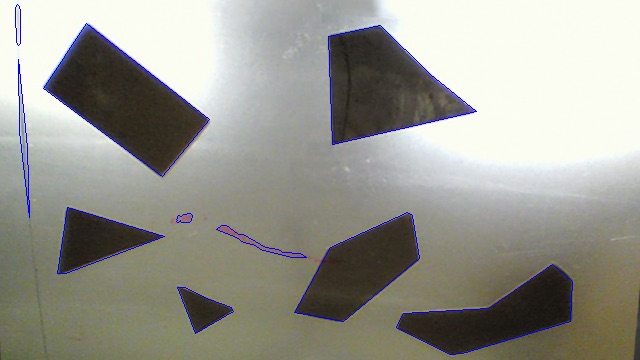

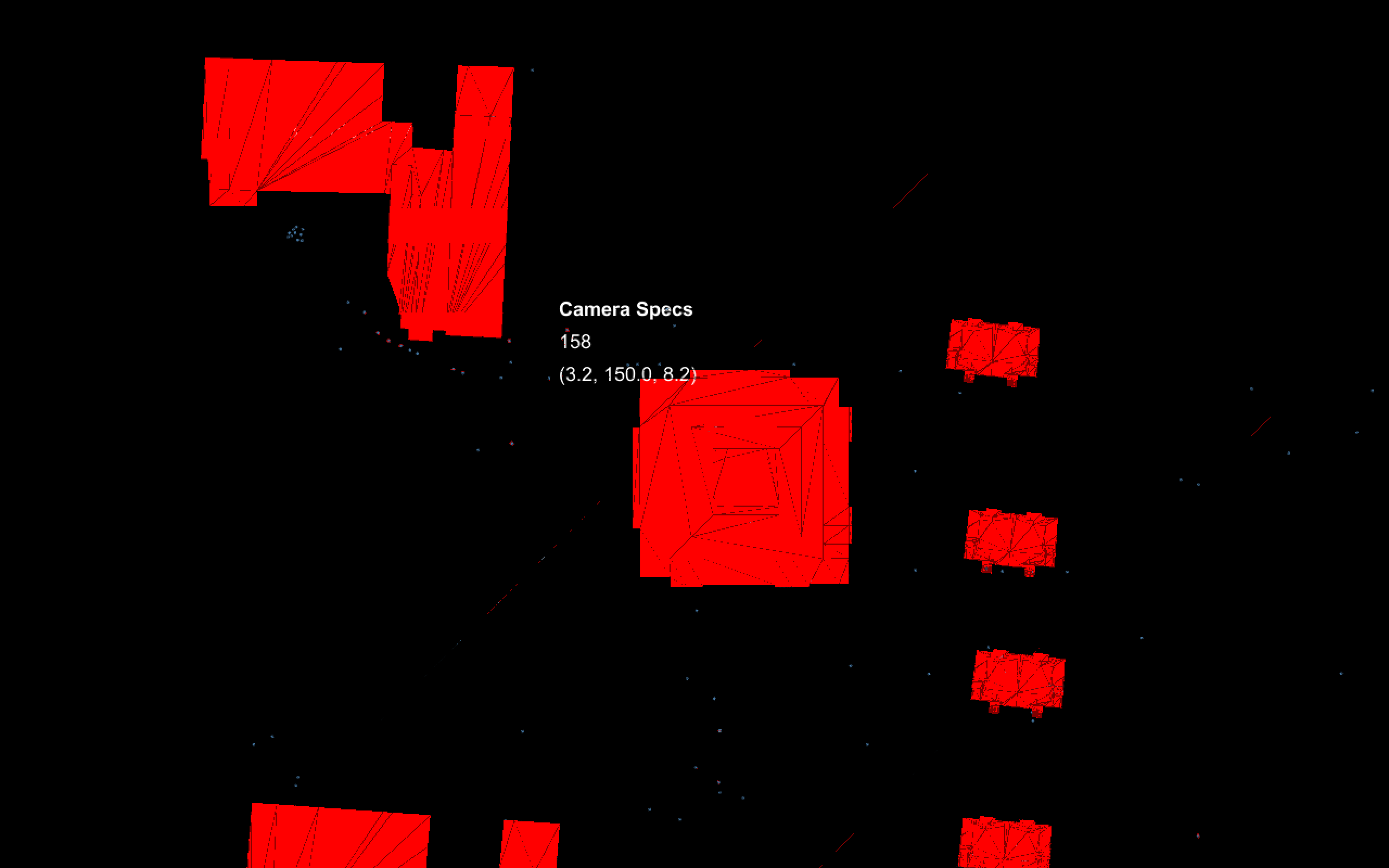

An early proof-of-concept photo of shapes atop a blurry surface.

We managed to solve both challenges with a single solution: giving the table a blurry surface. A frosted surface lets us back-project an image onto it from below; it also makes everything above the table appear blurry in the camera's image, except the silhouette of anything placed directly on the surface.

We cut a sheet of acrylic plastic to size on a band saw and coated it with some art-store "glass-frosting spray". Do this outside. We bought a wide-angle webcam, taped it inside the table, and placed the plastic surface on top. It worked.

Recognizing Objects

We processed the webcam's imagery using OpenCV running on a MacBook, training the software to recognize the footprints of a large set of pre-selected objects. I could write a whole post on how the code worked—but that time would probably be better spent actually making it work better. Here's a rough overview of the pipeline:

Images are captured by a webcam inside the table and loaded into a Python program.

The images are perspective-warped so that the four corners of the tabletop map onto the corners of a flat, rectangular image that doesn't contain the sides of the table. The position of each corner is hard-coded during calibration.
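
Mapping four known corners onto a rectangle is a perspective transform, described by a 3×3 matrix (a homography). The sketch below solves for that matrix with NumPy; it is the same linear system OpenCV's `cv2.getPerspectiveTransform` solves. The corner coordinates and output size are made-up calibration values, not the ones we used.

```python
import numpy as np

def homography_from_corners(src, dst):
    """Solve for the 3x3 matrix H mapping each src (x, y) corner onto its dst corner."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v,
        # rearranged into two linear equations in the unknowns h0..h7.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)  # fix h8 = 1

def apply_homography(H, points):
    """Map (x, y) points through H, dividing out the projective coordinate."""
    pts = np.hstack([np.asarray(points, dtype=float), np.ones((len(points), 1))])
    mapped = pts @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical calibration: where the tabletop's corners appear in the raw
# webcam frame (top-left, top-right, bottom-right, bottom-left).
TABLE_CORNERS_IN_CAMERA = [(112, 64), (530, 80), (548, 410), (96, 398)]
OUTPUT_W, OUTPUT_H = 800, 600
OUTPUT_CORNERS = [(0, 0), (OUTPUT_W, 0), (OUTPUT_W, OUTPUT_H), (0, OUTPUT_H)]

H = homography_from_corners(TABLE_CORNERS_IN_CAMERA, OUTPUT_CORNERS)
```

With OpenCV available, `cv2.warpPerspective(frame, H, (OUTPUT_W, OUTPUT_H))` would then resample each webcam frame into the flattened view. Since the corners only change when the camera moves, `H` can be computed once at calibration time.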

Images are converted to grayscale; the algorithm doesn't care about color at all.

Images are blurred to get rid of random specks of noise.
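
The grayscale and blur steps are one-liners in OpenCV (`cv2.cvtColor` with `cv2.COLOR_BGR2GRAY`, then `cv2.GaussianBlur`). To show what they compute, here is a NumPy-only sketch. The 5×5 binomial kernel is an illustrative choice, and results may differ from OpenCV's fixed-point arithmetic by a gray level or so.

```python
import numpy as np

# Rec. 601 luma weights (0.299 R + 0.587 G + 0.114 B), as used for
# COLOR_BGR2GRAY. OpenCV stores frames in B, G, R order, hence the order here.
BGR_WEIGHTS = np.array([0.114, 0.587, 0.299])

def to_gray(frame_bgr):
    """Collapse an (H, W, 3) BGR frame into an (H, W) uint8 luminance image."""
    return (frame_bgr.astype(np.float64) @ BGR_WEIGHTS).round().astype(np.uint8)

def gaussian_blur_5(gray):
    """5x5 Gaussian-like blur using the binomial kernel [1, 4, 6, 4, 1] / 16.

    The kernel is applied separably (rows, then columns); edge padding keeps
    border pixels from being darkened, and 'valid' mode trims it off again.
    """
    kernel = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16
    img = np.pad(gray.astype(np.float64), 2, mode="edge")
    img = np.apply_along_axis(lambda row: np.convolve(row, kernel, mode="valid"), 1, img)
    img = np.apply_along_axis(lambda col: np.convolve(col, kernel, mode="valid"), 0, img)
    return img.round().astype(np.uint8)
```

A single bright noise pixel of value 255 is spread over its 5×5 neighborhood and drops to 36 at its center, which is exactly the kind of speck the blur is meant to suppress before thresholding.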

Images are passed through an adaptive threshold filter, which turns pixels entirely black if they're darker than the average of surrounding pixels, and white otherwise.

Thresholding is necessary for the next step: tracing the contours (outlines) of the shapes.
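
The adaptive thresholding rule described above can be written out directly. This sketch compares each pixel with the mean of its `block_size` × `block_size` neighborhood minus a small constant `c`, which is the rule `cv2.adaptiveThreshold` applies with `cv2.ADAPTIVE_THRESH_MEAN_C` and `cv2.THRESH_BINARY`. The parameter values are illustrative, and border handling and rounding may differ slightly from OpenCV's.

```python
import numpy as np

def adaptive_mean_threshold(gray, block_size=11, c=2):
    """White (255) where a pixel is brighter than its local mean minus c, else black (0).

    gray: 2-D uint8 image. block_size: odd side length of the square neighborhood.
    """
    if block_size % 2 != 1:
        raise ValueError("block_size must be odd")
    k = block_size
    img = gray.astype(np.float64)
    h, w = img.shape
    # Replicate edge pixels so border pixels still get a full neighborhood.
    padded = np.pad(img, k // 2, mode="edge")
    # Integral image with a leading zero row/column: any window sum is 4 lookups.
    integral = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    integral[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    window_sum = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
                  - integral[k:k + h, :w] + integral[:h, :w])
    local_mean = window_sum / (k * k)
    return np.where(img > local_mean - c, 255, 0).astype(np.uint8)
```

In an OpenCV pipeline, the resulting mask would go to `cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)`, which returns `(contours, hierarchy)` in OpenCV 4. `findContours` traces white regions, so if objects show up as dark silhouettes they need to be inverted first, for example with `255 - mask` or by thresholding with `cv2.THRESH_BINARY_INV`.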

Various metrics are computed for each contour; shapes are identified by finding the closest-matching set of sample contours that have been manually labelled during calibration.
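
The specific metrics and matching rule are assumptions in the following sketch. It describes each contour by area, perimeter and circularity (none of which change when an object is rotated on the table), then returns the label of the nearest calibration sample, comparing metrics by relative difference so that area, measured in square pixels, doesn't drown out the others. The labels and shapes are hypothetical.

```python
import math
import numpy as np

def contour_features(points):
    """Rotation-invariant metrics for a closed polygon given as (x, y) points."""
    pts = np.asarray(points, dtype=float)
    nxt = np.roll(pts, -1, axis=0)  # each vertex paired with the next, wrapping around
    # Shoelace formula for polygon area.
    area = 0.5 * abs(np.dot(pts[:, 0], nxt[:, 1]) - np.dot(nxt[:, 0], pts[:, 1]))
    perimeter = float(np.linalg.norm(nxt - pts, axis=1).sum())
    circularity = 4 * math.pi * area / perimeter ** 2  # 1.0 for a perfect circle
    return np.array([area, perimeter, circularity])

def relative_distance(a, b):
    """Sum of per-metric differences, each relative to the larger of the two values."""
    scale = np.maximum(np.maximum(np.abs(a), np.abs(b)), 1e-9)
    return float(np.sum(np.abs(a - b) / scale))

def classify(points, labelled_samples):
    """Return the label of the closest sample.

    labelled_samples: list of (label, features) pairs recorded during calibration.
    """
    feats = contour_features(points)
    label, _ = min(((lbl, relative_distance(feats, f)) for lbl, f in labelled_samples),
                   key=lambda pair: pair[1])
    return label
```

OpenCV contours come as `(N, 1, 2)` arrays, so `contour.reshape(-1, 2)` fits `contour_features`. `cv2.contourArea` and `cv2.arcLength(contour, True)` compute the same area and perimeter, and `cv2.matchShapes` offers a Hu-moment comparison as a ready-made alternative.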

Applications can make requests to the image-recognition system—running as a local web server—and receive JSON data containing the position, size and type of each detected object.
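
A local JSON endpoint like this can be built with Python's standard library alone. In this sketch, the `/objects` path, the port and the field names (`type`, `x`, `y`, `size`) are hypothetical, and `latest_detections` stands in for whatever the recognition loop produced most recently.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Stand-in for the recognizer's latest output; a capture loop would keep
# replacing this list (while holding the lock). Field names are illustrative.
latest_detections = [
    {"type": "block", "x": 412.0, "y": 230.5, "size": 10404.0},
]
detections_lock = threading.Lock()

class DetectionHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/objects":
            self.send_error(404)
            return
        with detections_lock:
            body = json.dumps(latest_detections).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        # Allow a browser app served from another origin to read the response.
        self.send_header("Access-Control-Allow-Origin", "*")
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet while apps poll frequently

def serve(port=8000):
    """Start the server on a background thread and return it (port=0 picks a free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), DetectionHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

An app then polls `http://127.0.0.1:8000/objects` on a timer and redraws whenever the list changes. Polling keeps the recognizer decoupled from any particular app; a push channel such as WebSockets would cut latency at the cost of extra dependencies.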

Projecting an image

Thanks to the frosted-acrylic tabletop, we can rear-project images straight from the computer onto the surface of the table. The key to success here is a projector with a low throw ratio, meaning one that produces a large image without being very far from the surface. Our projector had a throw ratio of about 1.3, so it had to sit on the floor to project across the full area of our table. Of course, this depends entirely on the dimensions of your table; ideally, we'd have a very low throw ratio so we could have a large surface without making an unreasonably high table. Because our projector sat on the floor, we didn't bother attaching it to the table in the final iteration, though that's something we would've liked to do.
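
Throw ratio is the lens-to-surface distance divided by the width of the projected image, so sizing a rear-projection table comes down to one multiplication or division. The numbers below are illustrative, not measurements of our table, and the sketch ignores lens offset and any mirror folding the light path.

```python
def throw_distance(throw_ratio: float, image_width: float) -> float:
    """Lens-to-surface distance needed for an image of the given width (same units)."""
    return throw_ratio * image_width

def max_image_width(throw_ratio: float, available_distance: float) -> float:
    """Widest image that fits when the lens can be at most this far from the surface."""
    return available_distance / throw_ratio

# A 0.6 m wide tabletop image at a throw ratio of 1.3 needs about 0.78 m of throw,
# roughly the full height of an ordinary table.
needed = throw_distance(1.3, 0.6)
```

At a throw ratio of 1.3, that 0.78 m already means putting the projector on the floor; a short-throw projector with a ratio of, say, 0.5 would need only 0.3 m for the same image.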

Building Experiences

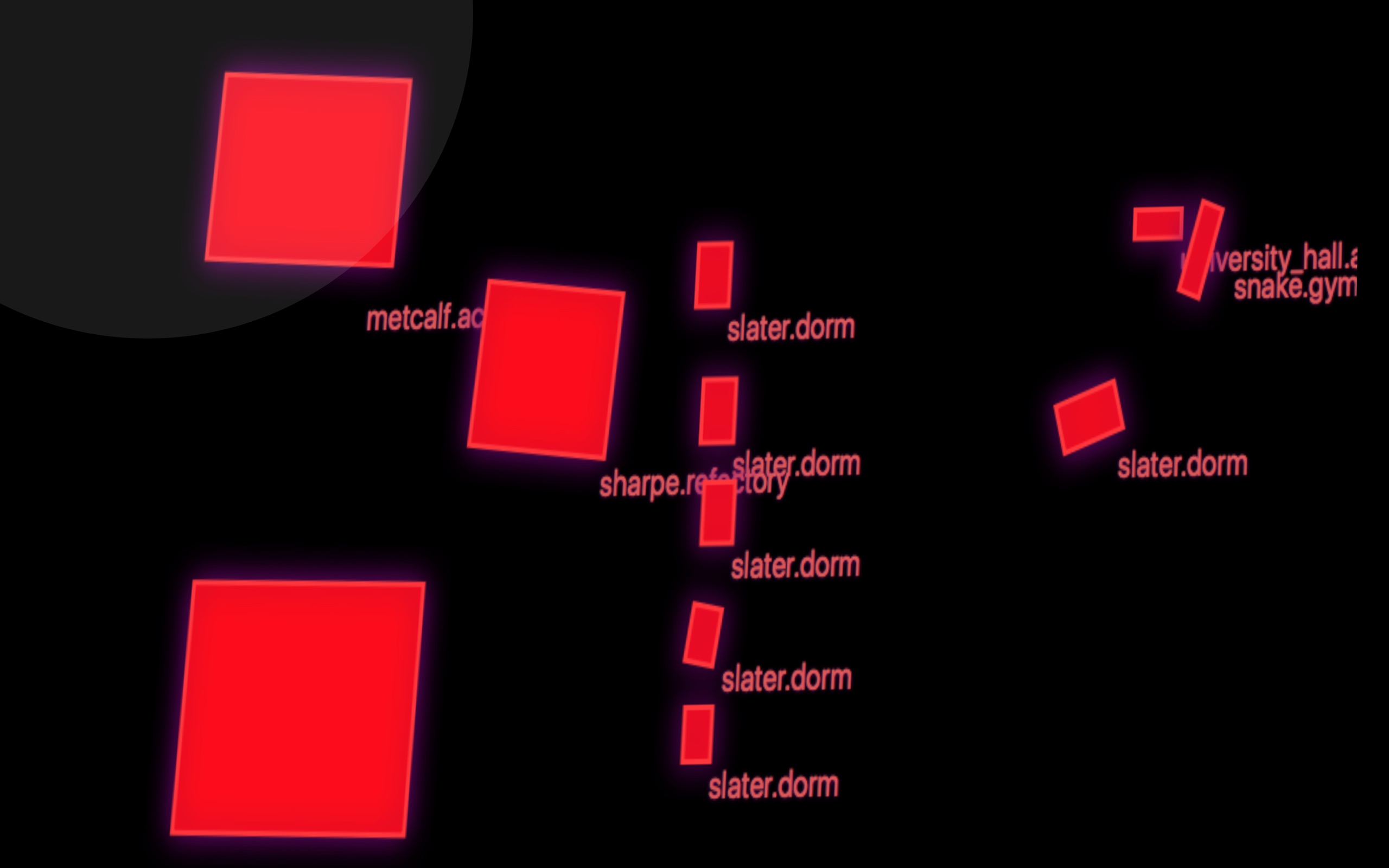

The fun part of the iPhone isn't the revolutionary multitouch display or the A7 system-on-a-chip — it's the incredible apps it enables people to build. For our demo, we had two "apps" to show off. The first was a rather boring HTML5 canvas demo that drew outlines and labels around every object you threw on it. It was an exciting tech demo, but it wasn't that interesting otherwise.

Far more interesting was Chén's app: a functional simulation of Brown University. We 3D-printed noteworthy campus buildings (and laser-cut silhouettes of the rest in cardboard) and built an agent simulation of students inhabiting a campus that players create by placing buildings onto the table. Students have dorms and classes, and they need food and exercise; the player's goal is to place buildings optimally to maximize students' happiness.
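
Chén's simulation models individual students. What follows is a hypothetical and much simpler stand-in, not his code: instead of simulating students, it scores a layout by how far each dorm is from the nearest building that meets each need (classes, food, exercise). The building types, the `walk_scale` constant and the 1 / (1 + d) falloff are all assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class Building:
    kind: str  # illustrative types: "dorm", "classroom", "dining", "gym"
    x: float
    y: float

NEEDS = ("classroom", "dining", "gym")  # hypothetical stand-ins for classes, food, exercise

def nearest_distance(origin, buildings, kind):
    """Distance from origin to the closest building of the given kind (inf if none)."""
    candidates = [b for b in buildings if b.kind == kind]
    if not candidates:
        return math.inf
    return min(math.hypot(b.x - origin.x, b.y - origin.y) for b in candidates)

def campus_happiness(buildings, walk_scale=100.0):
    """Average over dorms of per-need scores 1 / (1 + d / walk_scale), in [0, 1]."""
    dorms = [b for b in buildings if b.kind == "dorm"]
    if not dorms:
        return 0.0
    total = 0.0
    for dorm in dorms:
        # A missing building type gives d = inf and therefore a score of 0.
        scores = [1.0 / (1.0 + nearest_distance(dorm, buildings, need) / walk_scale)
                  for need in NEEDS]
        total += sum(scores) / len(scores)
    return total / len(dorms)
```

Positions would come straight from the recognizer's detections, so sliding a 3D-printed dining hall closer to the dorms raises the score on the next frame.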

Simulations like that are just the tip of the iceberg; we've brainstormed tons of ideas for applications that would benefit from the table's unique interaction model.

To reduce it to a dull principle, the table's interface seems ideal for any application where users add and remove objects of varying types and alter their parameters.
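
Applications like these care about changes (an object was added, removed or moved) more than raw snapshots. One way to derive those events from two successive lists of detections is sketched below. The greedy same-type nearest-neighbor matching, the `max_move` radius and the dict fields are illustrative assumptions.

```python
import math

def diff_detections(previous, current, max_move=30.0):
    """Turn two snapshots of detections into ("added" | "removed" | "moved", obj) events.

    Each detection is a dict with "type", "x" and "y". A current object is matched
    greedily to the nearest unmatched previous object of the same type, provided
    it lies within max_move pixels; anything left unmatched was removed.
    """
    unmatched_prev = list(previous)
    events = []
    for obj in current:
        same_type = [p for p in unmatched_prev if p["type"] == obj["type"]]
        best = min(same_type,
                   key=lambda p: math.hypot(p["x"] - obj["x"], p["y"] - obj["y"]),
                   default=None)
        if best is not None and math.hypot(best["x"] - obj["x"], best["y"] - obj["y"]) <= max_move:
            unmatched_prev.remove(best)
            if (best["x"], best["y"]) != (obj["x"], obj["y"]):
                events.append(("moved", obj))
        else:
            events.append(("added", obj))
    events.extend(("removed", p) for p in unmatched_prev)
    return events
```

Apps then react only to events, for example spawning a building when one is added, rather than rebuilding their whole state every frame. In practice a small dead zone for "moved" would absorb the pixel jitter of a stationary object.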

Chén wrote his own article about this, which explores the creative aspirations and possible applications of our project in more depth.

Next Steps

We're both super excited about the potential for tables like this — we think that with enough work, we'll be able to build a much more solid table, in the physical and software sense. Stay tuned!